@felixmulder

DECONSTRUCTING DOTTY

A next generation Scala compiler

Felix Mulder

ABOUT ME

- github.com/felixmulder

- Graduated last winter from LTH

- Built the new 2.12.x Scaladoc

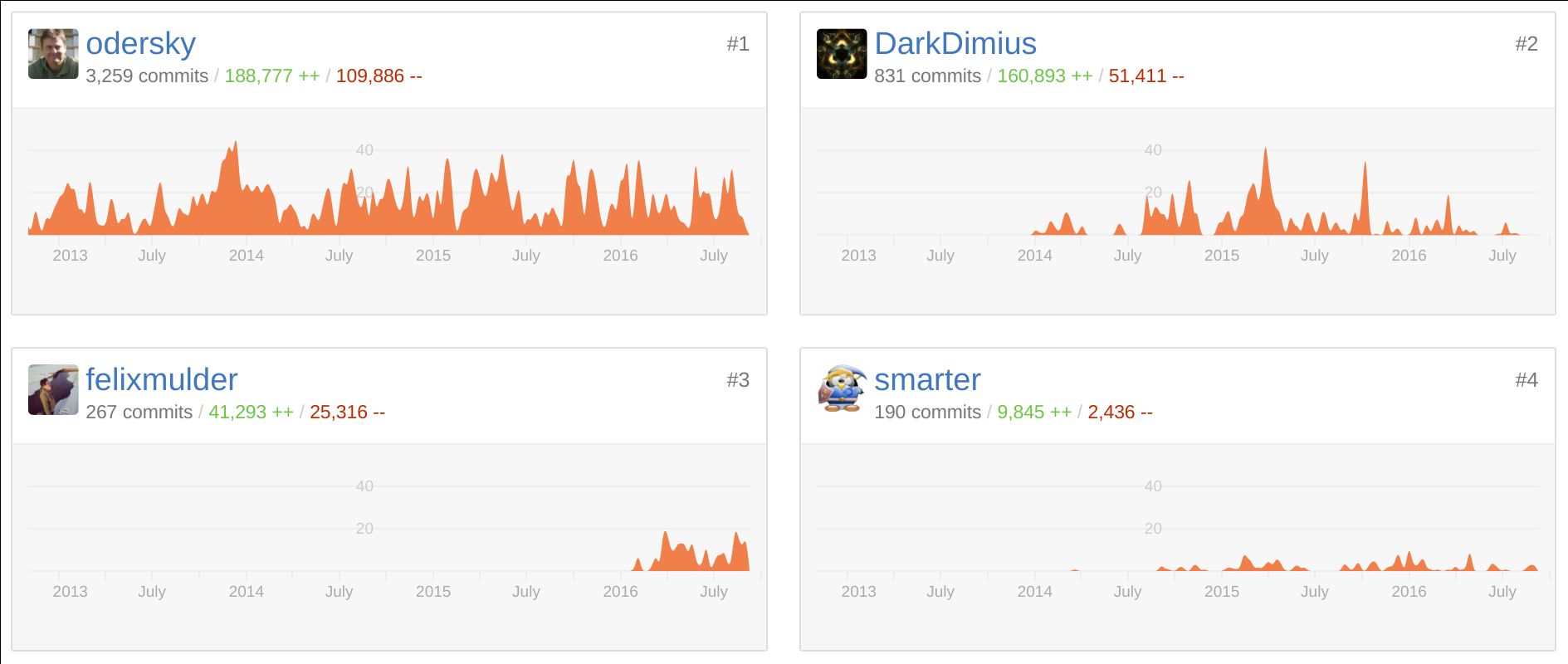

- Research fellow EPFL, working on Dottydoc at LAMP

- Compiler Engineer working on Dotty with Martin Odersky at LAMP

/end of bragging

/end of bragging

Scala

Who uses Scala?Camp I

“Scala is a better Java, type inference!”

Camp F

“Liek OMG, a monad’s just a monoid in the category of endofunctors”

“There is not agreement on what is ”best“, so all we can really do is try to find a local optimum. But finding that optimum is what drives me”

WHAT IS DOTTY?

- A compiler to try out new language and compiler concepts

- Developed at LAMP EPFL

- Nearing beta readiness

DOTTY’S FOCUS

- A proven sound foundation - DOT

- Focus on simplification

- Compilation speed

- Library defined optimizations

- Developer usability

KEY DIFFERENCES

- No more procedure syntax

- Union Types

- Intersection Types

- Trait Parameters

- TASTY

TASTY

- Pickling format

- Typed Trees

- Efficiently stored in bytecode

- Interop between binary incompatible compilers

Procedure Syntax

def foo {}

// error!

def foo = {}

// res: Unit-language:Scala2

Intersection and Union Types

A & Bis the greatest lower bound: a supertype of all subtypes of both A and BA | Bis the least upper bound: a subtype of all supertypes of both A and B

Union Types for Enums

case object Monday

case object Tuesday

case object Wednesday

case object Thursday

case object Friday

case object Saturday

case object Sunday

type Weekend = Saturday.type | Sunday.type

type Weekday = Monday.type | Tuesday.type...

type AnyDay = Weekday | WeekendPatternmatch exhaustivity checks

(Saturday: Weekend) match {

case Sunday => // incomplete!

}Intersection Types are the new `with`

trait A {

def foo: Int

}

trait B {

def bar: Int

}

def baz(ab: A & B) = ab.foo + ab.barTrait Parameters instead of Early Initializers

trait Super {

def x: String

println(x)

}

class Foo extends Super {

val x = "hello"

}

new Foo

// prints: nulltrait Super(x: String) {

println(x)

}

class Foo extends Super("hello")

new Foo

// prints: "hello"So now the fun stuff - Compiler internals

javac pipeline

- Parse

- Enter

- Annotate

- Attribue

- Flow

- Desugar

- Generate

dotty pipeline

source codeRegular Phases

Phase 1

Phase 2

Phase 3

Fused Phases

Phase 1

Phase 2

Phase 3

Let’s create a MiniPhase!

- A simplistic static linter

- This linter really cares about division by zero

So what do we need to do?

- Create a MiniPhase

- Add the Phase to Compiler

- Override the appropriate node transforms

Coding time!

So why aren’t we putting things like this in Dotty?

- Principle of least power

- Domain specific optimizations

- …maybe we have something more fitting?

Dotty-Linker to the rescue!

- Whole program optimization

- Call graph

- User defined rewrite rules

Reimplementing our linter

@rewrites object rules {

def customFancyWarning(x: Int | Double | ... | Numeric[_]) =

Warn(pattern = x / 0,

msg = "division by zero, you fool!")

}Why do we need an optimizer?

Scala provides:

- higher-order types

- generic methods

- generic classes

- multiple inheritance

- pattern matching

- lazy evaluation

- garbage collection

Example

public double average(int[] data) {

int sum = 0;

for(int i = 0; i < data.length; i++) {

sum += data[i];

}

return sum * 1.0d / data.length;

}def average(xs: Array[Int]) =

xs.reduce(_ + _) * 1.0 / xs.size| Java | Scala |

| 45 msec | 872 msec |

Java

public double average(int[] data) {

int sum = 0;

for(int i = 0; i < data.length; i++) {

sum += data[i];

}

return sum * 1.0d / data.length;

}- Range check

- Addition

- Index increment

Scala

def average(xs: Array[Int]) =

xs.reduce(_ + _) * 1.0 / x.sizedef reduce(op: Function2[Obj, Obj, Obj]): Obj = {

var first = true

var acc: Obj = null

this.foreach { e =>

if (first) {

acc = e

first = false

} else acc = op.apply(acc, e)

}

acc

}

def foreach(f: Funtion1[Obj, Obj]) {

var i = 0

val len = length

while (i < len) {

f.apply(this(i))

i += 1

}

}Compiler needs to lower generics

def plus[T](a: T, b: T)(implicit num: Numeric[T]): T =

num.plus(a, b)After getting rid of generics:

def plus(a: Object, b: Object, num: Numeric): Object =

num.plus(a, b)What does:

plus(1, 1)look like?

unbox(plus(box(1), box(1), intNum))Specialize all the things!

trait T[A1, A2, ..., An]

10 ^ n

trait T[A1, A2, ..., An] {

def foo[B1, B2, ..., Bm] = ???

}

10 ^ (n + m)

Existing solutions for Scala 2

@specialized- Miniboxing

Specialization

def foo[@specialized A](a: A) = ???int, long, short, byte, char, float, double, boolean, "void"+ 1 for reference types

== 10 versions

Specialization

- Mark types using

@specialized - Can specify for which types e.g:

@specialized(Int) - 10^n

- No inheritance

Miniboxing

- Reduces the factor to: 3^n

- Handles inheritance

trait Function3[-T1, -T2, -T3, +R]Both: how many specializations are needed?

- Specialization: 10000 classes

- Miniboxing: 81 classes

Are they asking the right question though?

Dotty Linker’s approach

Don’t ask the user what to specialize

- specialize on what’s actually being used.

Auto-Specialization in Dotty Linker

- Takes your program & libraries, analyzes how you use them

- Sees what instantiations are needed

- Specializes them

- No need to annotate types

def foo[T](x: Seq[T], id: Int) = x(id)- Type specialization removes boxing

- Term specialization removes virtual dispatch

Status

- Dotty

- http://github.com/lampepfl/dotty

- Example projects working, libraries are being ported

- User experience: nearing beta

- Dotty Linker

- http://github.com/dotty-linker/dotty

- Rewrite rules are being tested using property based testing

- Features being ported to Dotty

- User experience: alpha